Leveraging Advanced AI with Python Execution and Result Validation

Combining AI with programming lets developers and data scientists automate complex computational tasks. One effective approach is an AI agent that can execute Python code and validate the results. This tutorial builds such a system with LangChain's ReAct agent framework and Anthropic's Claude API. Claude reasons about the task and writes code, a Python REPL tool executes it, and a validation tool checks the output before the agent gives its final answer.

Setting Up Your Environment

To get started, install the required packages. LangChain provides the agent orchestration (the ReAct agent and its executor). langchain-anthropic provides the ChatAnthropic integration for Claude. langchain-core supplies shared building blocks such as prompt templates, and anthropic is Anthropic's Python SDK:

```bash
pip install langchain langchain-anthropic langchain-core anthropic
```

In a Jupyter or Colab notebook, prefix the command with an exclamation mark. Once these packages are installed, both the agent orchestration tools and the Claude-specific bindings are available in your environment.

Importing Required Libraries

The next step involves bringing together the essential libraries and modules:

```python
import io
import json
import os
import re
import sys
from typing import Any, Dict, List

from langchain.agents import AgentExecutor, create_react_agent
from langchain.tools import Tool
from langchain_anthropic import ChatAnthropic
from langchain_core.prompts import PromptTemplate
```

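These imports cover what a ReAct-style agent needs. create_react_agent and AgentExecutor build and run the agent, Tool wraps plain Python functions as agent tools, PromptTemplate defines the prompt, and ChatAnthropic connects to the Claude API. From the standard library, os gives access to the environment (for example, to read an API key), sys and io are typically used to capture printed output, re and json help parse and serialize text, and typing provides type hints.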

Creating a Python REPL Tool

At the heart of our AI agent is a Python REPL (Read-Eval-Print Loop) tool that executes code dynamically. Below is the outline of PythonREPLTool, with method bodies elided:

```python
from typing import Any, Dict, List


class PythonREPLTool:
    def __init__(self):
        # Initialization (elided)
        ...

    def run(self, code: str) -> str:
        # Execute and capture output and errors
        ...

    def get_execution_history(self) -> List[Dict[str, Any]]:
        return self.execution_history

    def clear_history(self):
        self.execution_history = []
```

This class executes arbitrary code, captures standard output and errors, and keeps a history of executions that get_execution_history() returns and clear_history() resets. Returning the captured output (or the error) after every call gives the agent concrete feedback to reason over in its next step.
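Since the method bodies are elided, here is a minimal sketch of one way to fill them in. It assumes the REPL runs code with exec() in a dictionary that persists between calls and captures printed output with contextlib.redirect_stdout. The globals_dict attribute, the history record keys (code, output, error, success), and the _strip_fences helper are illustrative choices, not the original implementation.

```python
import contextlib
import io
import re
import traceback
from typing import Any, Dict, List


class PythonREPLTool:
    """Minimal sketch: run code with exec() in a namespace that persists across calls."""

    def __init__(self) -> None:
        # Hypothetical attribute: shared globals, so variables survive between run() calls.
        self.globals_dict: Dict[str, Any] = {}
        self.execution_history: List[Dict[str, Any]] = []

    @staticmethod
    def _strip_fences(code: str) -> str:
        # Models often wrap Action Input in Markdown code fences; remove them.
        code = code.strip()
        code = re.sub(r"^```(?:python)?\s*", "", code)
        return re.sub(r"\s*```$", "", code)

    def run(self, code: str) -> str:
        code = self._strip_fences(code)
        buffer = io.StringIO()
        error = None
        try:
            # Everything the executed code prints is captured in `buffer`.
            with contextlib.redirect_stdout(buffer):
                exec(code, self.globals_dict)
        except Exception:
            # Report the traceback to the agent instead of crashing the loop.
            error = traceback.format_exc()
        output = buffer.getvalue()
        self.execution_history.append(
            {"code": code, "output": output, "error": error, "success": error is None}
        )
        result = f"Code:\n{code}\n\nOutput:\n{output}"
        if error is not None:
            result += f"\nError:\n{error}"
        return result

    def get_execution_history(self) -> List[Dict[str, Any]]:
        return self.execution_history

    def clear_history(self) -> None:
        self.execution_history = []


repl = PythonREPLTool()
repl.run("x = 21")
print(repl.run("print(x * 2)"))
```

The second call's output contains 42 because x survives from the first call. A failing snippet returns its traceback as text instead of raising, so the agent can read the error and try again. Note that exec() runs the code with full access to your machine, so this sketch is only suitable for trusted, local experiments.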

Automating Result Validation

To validate the outputs generated by the Python REPL, we create a ResultValidator class. This class automatically generates and runs validation routines tailored to the results:

```python
from typing import Any, Dict, List


class ResultValidator:
    def __init__(self, python_repl: PythonREPLTool):
        self.python_repl = python_repl

    def validate_mathematical_result(self, description: str, expected_properties: Dict[str, Any]) -> str:
        ...

    def validate_data_analysis(self, description: str, expected_structure: Dict[str, Any]) -> str:
        ...

    def validate_algorithm_correctness(self, description: str, test_cases: List[Dict[str, Any]]) -> str:
        ...
```

Each method handles one kind of validation. validate_mathematical_result checks numerical properties against specified expectations, validate_data_analysis checks that an analysis result has the expected structure, and validate_algorithm_correctness runs an algorithm implementation against a list of test cases.
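The validator's method bodies are elided as well. To illustrate the idea of generating validation code and running it through the REPL, here is a sketch of validate_algorithm_correctness only. It assumes that description names a function already defined in the REPL session and that each test case has the hypothetical, JSON-serializable shape {"input": [...], "expected": ...}. MiniREPL is a stripped-down, hypothetical stand-in for PythonREPLTool so that the block runs on its own.

```python
import contextlib
import io
import json
import textwrap
from typing import Any, Dict, List


class MiniREPL:
    """Hypothetical stand-in for PythonREPLTool: exec() in a shared namespace."""

    def __init__(self) -> None:
        self.namespace: Dict[str, Any] = {}

    def run(self, code: str) -> str:
        buffer = io.StringIO()
        try:
            with contextlib.redirect_stdout(buffer):
                exec(code, self.namespace)
        except Exception as exc:
            return f"{buffer.getvalue()}Error: {exc!r}"
        return buffer.getvalue()


class ResultValidator:
    def __init__(self, python_repl: Any) -> None:
        self.python_repl = python_repl

    def validate_algorithm_correctness(
        self, description: str, test_cases: List[Dict[str, Any]]
    ) -> str:
        # Assumption: `description` is the name of a function defined in the REPL.
        cases_json = json.dumps(test_cases)
        # Generate a validation script; doubled braces survive as f-string fields.
        validation_code = textwrap.dedent(f"""
            import json
            _cases = json.loads({cases_json!r})
            _passed = 0
            for _i, _case in enumerate(_cases):
                _actual = {description}(*_case["input"])
                _ok = _actual == _case["expected"]
                _passed += _ok
                print(f"case {{_i}}: {{'PASS' if _ok else 'FAIL'}} (got {{_actual!r}})")
            print(f"{{_passed}}/{{len(_cases)}} test cases passed")
        """)
        # Run it in the same session, so it sees functions the agent defined earlier.
        return self.python_repl.run(validation_code)


repl = MiniREPL()
repl.run(
    "def is_prime(n):\n"
    "    return n > 1 and all(n % d for d in range(2, int(n ** 0.5) + 1))"
)
validator = ResultValidator(repl)
print(validator.validate_algorithm_correctness(
    "is_prime",
    [{"input": [2], "expected": True},
     {"input": [9], "expected": False},
     {"input": [97], "expected": True}],
))
```

Running this prints one PASS or FAIL line per case, followed by "3/3 test cases passed". Because the check runs inside the REPL rather than in the validator's own process, the validator can test whatever the agent defined in earlier steps.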

Initializing the REPL and Validator

With both the PythonREPLTool and ResultValidator classes defined, we instantiate them and pass the REPL instance to the validator:

```python
python_repl = PythonREPLTool()
validator = ResultValidator(python_repl)
```

Sharing one REPL instance is what makes the feedback loop work. Because the REPL maintains state between executions, validation code runs in the same session as the agent's earlier code and can inspect the variables and results that code produced.

Creating LangChain Tools

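Next, we wrap the REPL and the validator as LangChain tools, each with a name, a description, and the function to call:

```python
python_tool = Tool(
    name="python_repl",
    description="Execute Python code and return both the code and its output. Maintains state between executions.",
    func=python_repl.run,
)

validation_tool = Tool(
    name="result_validator",
    description="Validate the results of previous computations with specific test cases and expected properties.",
    # Only validate_mathematical_result is exposed, with no expected properties.
    func=lambda query: validator.validate_mathematical_result(query, {}),
)
```

Wrapping the REPL and the validator as LangChain Tool objects is what lets the agent call them. The descriptions matter: the ReAct agent inserts each tool's name and description into the prompt's {tools} placeholder, and the model relies on them to choose a tool. As wired here, the validator tool always calls validate_mathematical_result with an empty expected_properties dictionary, so the agent can pass only a free-text description.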
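If you are free to change that wiring, one option is to let the tool accept an optional JSON payload and dispatch on it, so the agent can also reach the data-analysis and algorithm validators. The helper make_validation_func and the payload keys type, description, params, and test_cases are hypothetical. Plain-text input falls back to the original behavior.

```python
import json
from typing import Any, Callable


def make_validation_func(validator: Any) -> Callable[[str], str]:
    """Hypothetical helper: route a tool input string to a ResultValidator method."""

    def validate(query: str) -> str:
        try:
            payload = json.loads(query)
        except json.JSONDecodeError:
            payload = None
        if not isinstance(payload, dict):
            # Plain text (or non-object JSON): keep the original behavior.
            return validator.validate_mathematical_result(query, {})
        kind = payload.get("type", "math")
        description = payload.get("description", "")
        if kind == "data":
            return validator.validate_data_analysis(description, payload.get("params", {}))
        if kind == "algorithm":
            return validator.validate_algorithm_correctness(
                description, payload.get("test_cases", [])
            )
        return validator.validate_mathematical_result(description, payload.get("params", {}))

    return validate
```

You would then pass func=make_validation_func(validator) to the result_validator Tool and describe the JSON format in its description, so the model knows it can use it.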

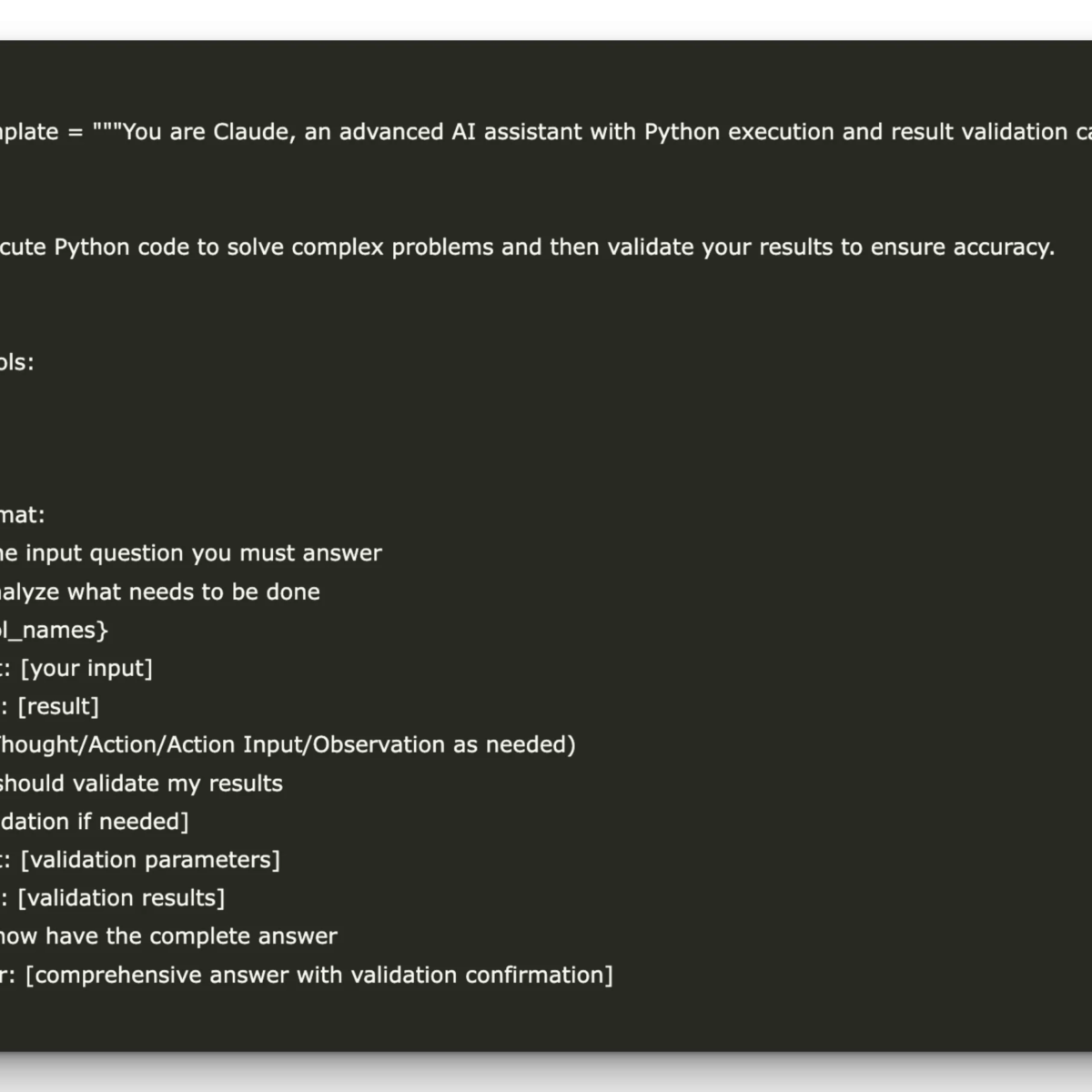

Designing the Agent’s Prompt

Crafting a structured prompt is essential for guiding the AI agent through its reasoning process. Here’s how the prompt is constructed:

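```python
from langchain_core.prompts import PromptTemplate

prompt_template = """You are Claude, an advanced AI assistant with Python execution and result validation capabilities.

Available tools:
{tools}

Use this format:

Question: the input question you must answer
Thought: analyze what needs to be done
Action: the action to take, one of [{tool_names}]
Action Input: [your input]
Observation: [result]
… (repeat Thought/Action/Action Input/Observation as needed)
Thought: I should validate my results
Action: [validation if needed]
Action Input: [validation parameters]
Observation: [validation results]
Thought: I now have the complete answer
Final Answer: [comprehensive answer with validation confirmation]

Begin!

Question: {input}
Thought:{agent_scratchpad}"""

# create_react_agent requires {tools}, {tool_names} and {agent_scratchpad};
# AgentExecutor supplies the user's question as {input}.
prompt = PromptTemplate.from_template(prompt_template)
```

The template spells out the ReAct loop of reasoning (Thought), tool use (Action and Action Input), and feedback (Observation), and adds an explicit validation step before the Final Answer. The {tools} and {tool_names} placeholders are filled from the tool list, {input} receives the user's question, and {agent_scratchpad} carries the agent's previous steps. create_react_agent rejects a prompt that lacks the tools, tool_names, or agent_scratchpad variables.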
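To see why the rigid format matters, here is a simplified parser for a single model turn. It illustrates how ReAct output can be split into either a tool call or a final answer; it is not LangChain's actual parser, although LangChain's ReAct output parser also applies a regular expression to the Action and Action Input lines. parse_react_step is a hypothetical name. By default, create_react_agent also stops generation at a newline followed by "Observation", so the model does not write its own tool results.

```python
import re
from typing import Tuple

# "Action: <tool>" followed on the next line by "Action Input: <input>".
ACTION_RE = re.compile(r"Action\s*:\s*(.*?)\s*\nAction\s*Input\s*:\s*(.*)", re.DOTALL)


def parse_react_step(text: str) -> Tuple[str, str, str]:
    """Simplified ReAct parser: returns (kind, tool_name_or_empty, payload)."""
    if "Final Answer:" in text:
        answer = text.split("Final Answer:", 1)[1].strip()
        return ("final", "", answer)
    match = ACTION_RE.search(text)
    if match is None:
        raise ValueError(f"Could not parse model output: {text!r}")
    tool = match.group(1).strip()
    # Models sometimes quote the input; strip surrounding quotes.
    tool_input = match.group(2).strip().strip('"')
    return ("action", tool, tool_input)


step = "Thought: list small primes\nAction: python_repl\nAction Input: print([2, 3, 5, 7])"
print(parse_react_step(step))
```

The executor would call the named tool with the parsed input, append the result as an Observation to the scratchpad, and ask the model for the next step, repeating until a Final Answer appears.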

Constructing the Advanced AI Agent

To bring all the components together, we define the AdvancedClaudeCodeAgent class, which encapsulates all functionalities:

```python
from typing import Any, Dict


class AdvancedClaudeCodeAgent:
    def __init__(self, anthropic_api_key=None):
        ...

    def run(self, query: str) -> str:
        ...

    def validate_last_result(self, description: str, validation_params: Dict[str, Any]) -> str:
        ...

    def get_execution_summary(self) -> Dict[str, Any]:
        ...
```

The class exposes three entry points: run() sends a query through the agent, validate_last_result() checks the most recent result against the given parameters, and get_execution_summary() reports on the execution history.
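get_execution_summary is elided in the original. A plausible body, sketched here as a standalone function named summarize_history (hypothetical), aggregates the REPL's execution history. It assumes each history record carries code and success keys, which is an assumption about PythonREPLTool rather than something the original shows.

```python
from typing import Any, Dict, List


def summarize_history(history: List[Dict[str, Any]]) -> Dict[str, Any]:
    """Hypothetical get_execution_summary body: aggregate REPL history records.

    Assumes records shaped like {"code": ..., "output": ..., "error": ..., "success": ...}.
    """
    total = len(history)
    successes = sum(1 for record in history if record.get("success"))
    return {
        "total_executions": total,
        "successful_executions": successes,
        "failed_executions": total - successes,
        "success_rate": successes / total if total else 0.0,
        "last_code": history[-1]["code"] if history else None,
    }


history = [
    {"code": "x = 1", "success": True},
    {"code": "1 / 0", "success": False},
    {"code": "print(x)", "success": True},
]
print(summarize_history(history))
```

Inside the class, and assuming the agent holds its REPL as a python_repl attribute, the method could simply return summarize_history(self.python_repl.get_execution_history()).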

Demonstrating the Agent’s Capabilities

Finally, we can test the setup with a few example queries to showcase the functionalities:

```python
if __name__ == "__main__":
    # Replace the placeholder with your own Anthropic API key.
    API_KEY = "Use Your Own Key Here"
    agent = AdvancedClaudeCodeAgent(anthropic_api_key=API_KEY)

    # Analysis examples
    query1 = "Find all prime numbers between 1 and 200..."
    result1 = agent.run(query1)
    print(result1)

    query2 = "Create a comprehensive sales analysis..."
    result2 = agent.run(query2)
    print(result2)
```

Each query exercises the full loop: Claude writes code, runs it through the python_repl tool, and, as the prompt instructs, should validate the result before giving its final answer.
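For the first query, here is the sort of code the agent might write and the kind of checks a validator could run on it. This is a hand-written sketch, not output from the agent. It computes the primes with a Sieve of Eratosthenes and validates them against an independent trial-division test, plus structural properties (sorted, unique, in range). The function names are hypothetical.

```python
from typing import Dict, List


def primes_between(lo: int, hi: int) -> List[int]:
    """Sieve of Eratosthenes: all primes p with lo <= p <= hi."""
    if hi < 2:
        return []
    is_candidate = [True] * (hi + 1)
    is_candidate[0] = is_candidate[1] = False
    for n in range(2, int(hi ** 0.5) + 1):
        if is_candidate[n]:
            # Cross off multiples of n, starting at n * n.
            for multiple in range(n * n, hi + 1, n):
                is_candidate[multiple] = False
    return [n for n in range(max(lo, 2), hi + 1) if is_candidate[n]]


def is_prime(n: int) -> bool:
    """Independent trial-division test used to cross-check the sieve."""
    if n < 2:
        return False
    return all(n % d for d in range(2, int(n ** 0.5) + 1))


def validate_primes(primes: List[int], lo: int, hi: int) -> Dict[str, bool]:
    """Property checks a validator might run; every value should be True."""
    return {
        "sorted": primes == sorted(primes),
        "unique": len(primes) == len(set(primes)),
        "in_range": all(lo <= p <= hi for p in primes),
        "matches_trial_division": primes == [n for n in range(lo, hi + 1) if is_prime(n)],
    }


primes = primes_between(1, 200)
print(len(primes), primes[:5], primes[-1])  # 46 [2, 3, 5, 7, 11] 199
print(validate_primes(primes, 1, 200))
```

Cross-checking with a different method is the point: if the sieve had an off-by-one error, the trial-division comparison would fail even though the list still looked plausible.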

In short, combining LangChain's ReAct agent framework with Anthropic's Claude API yields an agent that not only executes Python code but also checks its outputs. This closed loop of generation, execution, and validation makes the results more reliable, which is useful for data analysis, algorithm testing, and machine learning pipelines.