Understanding Affordances in Animal Perception

When psychologist James Gibson introduced the concept of "affordances," the possibilities for action that an environment offers an animal, he proposed a groundbreaking idea: perception cannot be fully understood without acknowledging the intrinsic connection between an organism and its environment. Gibson argued that the world isn’t just a backdrop that animals observe; each animal experiences it from its own perspective. This interaction between an organism’s body, senses, and surroundings forms the basis of ecological perception, in which experience of the world is continuously shaped by the organism’s interactions within it.

The Evolution of Ecological Neuroscience

Gibson’s ideas have spread across several fields, especially psychology and ethology, and are now gaining traction in neuroscience. Researchers increasingly recognize that studying neural function or behavior detached from its ecological context offers limited insight. This recognition has given rise to the emerging field of ecological neuroscience, which holds that to understand how an animal’s brain operates, one must first understand the environment it inhabits, including the animal’s typical movements and the sensory experiences they evoke.

Visual Processing and Evolutionary Context

Viewed through this ecological lens, differences in visual processing among species can often be traced back to their specific ecological niches. Neural peculiarities, such as how many neurons are allocated to particular visual features (for example, color coding in the retina and V1) or asymmetries in visual field specialization, reveal how evolutionary pressures have sculpted each brain to perceive and act efficiently within its environment. As Horace Barlow famously put it, “A wing would be a most mystifying structure if one did not know that birds flew.”

Seeing Through the Animal’s Eyes

To understand animal behavior and brain function more deeply, we must first grasp how animals see their world. Reconstructing an animal’s ecological niche from its own perspective is crucial. Here, generative artificial intelligence (AI) stands out as a promising tool. By creating virtual environments, it lets scientists visualize, and form hypotheses about, how different animals experience their surroundings. Linking perception and environment in this way fits Gibson’s view that an organism and its context are inseparable.

The Role of AI in Vision Research

The interplay between neuroscience and AI, particularly in vision research, illustrates the value of this ecological perspective. Artificial neural networks trained on large datasets have proven to be effective models of visual processing. In particular, models of the primate ventral visual pathway, which supports object recognition, have advanced largely thanks to datasets like ImageNet, whose many photographs approximate typical human visual experience.

Limitations of Dataset-Driven Models

However, the limitations of datasets like ImageNet have become increasingly apparent. These datasets offer a curated snapshot of human life and lack the embodied experience of moving through the world. They show what humans look at in static images, but not how perception unfolds through movement. As models trained on them reach performance plateaus, gaps between model representations and neural data become visible. Shortfalls in measures such as perceptual robustness and behavioral generalization suggest that crucial elements of natural visual experience are missing.

The Need for Ecological Understanding

What is missing from such datasets may be ecology itself: the ongoing interaction between a moving animal and its environment. While ImageNet presents a static view of the world, real-world perception unfolds dynamically, shaped by continuous feedback as organisms move and interact. For instance, a frog’s visual world is defined not by still images of flies but by the motion patterns of its prey against the changing backdrop of its habitat.

Key Factors Shaping Sensory Experience

Several factors contribute to the sensory statistics encountered by an animal:

- The Environment: The visual landscapes of a city-dwelling human, a desert lizard, and a forest bird vary vastly in structure and dynamics.

- Physical Attributes of the Animal: The form of the animal, whether a rat with its ground-level perspective or a monkey with a more elevated vantage point, determines how it perceives its environment.

- Movement Patterns: The characteristic ways in which an animal explores its surroundings shape its sensory inputs. For example, a rat’s exploratory movements contrast sharply with a tree shrew’s rapid movements through the trees, leading to differing visual experiences even in similar settings.

These three elements together define what is known as the ecological niche, which describes the dynamic interplay between an animal and its world. Capturing this triad experimentally is difficult: measuring real-time sensory input alongside continuous behavior and environmental interaction remains a frontier in neuroscience.
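As a rough illustration of how body and movement shape what reaches the eye, the sketch below stores the three factors in a small record and derives two geometric consequences: the angle below the horizontal at which a point on flat ground appears, and how fast that angle changes as the animal walks toward the point. The `Niche` class, its field names, and the eye heights and speeds are illustrative assumptions, not values from the text, and the flat-ground geometry is a deliberate simplification.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class Niche:
    """Hypothetical record of the three factors listed above."""
    environment: str       # e.g. "forest floor"
    eye_height_m: float    # body: eye height above the ground (assumed value)
    speed_m_per_s: float   # movement: typical forward speed (assumed value)


def depression_angle_deg(niche: Niche, ground_distance_m: float) -> float:
    """Angle below the horizontal at which a ground point appears.

    Assumes flat ground and a point-like eye; plain trigonometry only.
    """
    return math.degrees(math.atan2(niche.eye_height_m, ground_distance_m))


def angular_speed_deg_per_s(niche: Niche, ground_distance_m: float) -> float:
    """Rate of change of that angle while walking straight toward the point."""
    h = niche.eye_height_m
    d = ground_distance_m
    v = niche.speed_m_per_s
    # d/dt atan(h / d), with d shrinking at speed v, equals v * h / (h^2 + d^2).
    return math.degrees(v * h / (h * h + d * d))


# Two made-up observers in the same environment, looking at a point 1 m ahead.
rat = Niche("forest floor", eye_height_m=0.05, speed_m_per_s=0.3)
human = Niche("forest floor", eye_height_m=1.6, speed_m_per_s=1.4)
for animal in (rat, human):
    print(animal.eye_height_m,
          depression_angle_deg(animal, 1.0),
          angular_speed_deg_per_s(animal, 1.0))
```

Even in this toy setting, the same ground point falls at very different places in the visual field and moves at different rates for the two observers, which is the sense in which identical surroundings can yield different sensory statistics.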

Generative AI: A New Frontier

Advances in generative AI offer a new way to tackle these challenges. Recent video and multimodal generative models, particularly diffusion-based systems, enable researchers to create rich, dynamic visual scenes. These models are trained on diverse real-world imagery and can approximate the physical dynamics found in nature.

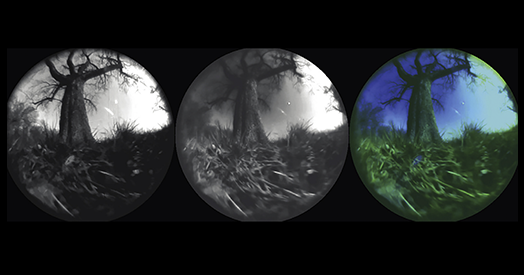

Simulating Animal Perspectives

One of the most exciting aspects of these AI technologies is that their output can be guided. Researchers can specify which elements a scene should contain and simulate movement through it. This makes it possible to create virtual environments that approximate how different species would perceive the world as they traverse various terrains. Historical descriptions and field studies can inform these simulations, producing realistic reconstructions of an animal’s visual experience as it navigates its surroundings.
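To make the idea of guidance concrete, here is a minimal sketch of how a scene specification might be written down and flattened into a text prompt. Everything in it is hypothetical: the `SceneSpec` class, its fields, the default values, and the prompt wording are assumptions for illustration, and the sketch deliberately stops before calling any particular generative model.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class SceneSpec:
    """Hypothetical description of a scene to request from a video model."""
    habitat: str
    elements: List[str] = field(default_factory=list)
    eye_height_m: float = 0.05        # assumed camera height for the species
    path: str = "slow forward walk"   # assumed movement through the scene

    def to_prompt(self) -> str:
        """Flatten the spec into one text prompt (wording is illustrative)."""
        parts = [f"first-person video in a {self.habitat}"]
        if self.elements:
            parts.append("containing " + ", ".join(self.elements))
        parts.append(f"camera {self.eye_height_m:.2f} m above the ground")
        parts.append(f"camera motion: {self.path}")
        return "; ".join(parts)


# Example: a ground-level view, with details that field notes might supply.
spec = SceneSpec("meadow", elements=["tall grass", "beetles"])
print(spec.to_prompt())
```

Keeping the specification as structured data rather than free text makes it easier to vary one factor at a time, for example changing only the eye height while holding the habitat and path fixed.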

Bridging the Gap in Neuroscience

The rapid evolution of generative AI may allow scientists to bridge the long-standing divide between perception and environment. These developments could enrich our understanding of how animals perceive their worlds, a goal closely aligned with Gibson’s original insight into the inseparable relationship between organisms and their habitats. As the field progresses, it opens room for new research approaches to cognition, behavior, and perception across the animal kingdom.